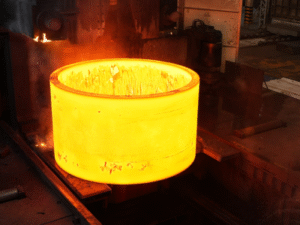

High-temperature alloys are essential materials in extreme environments such as gas turbines, aerospace components, and industrial furnaces. They must retain their mechanical properties and structural integrity during prolonged exposure to elevated temperatures. Oxidation is one of the most critical degradation mechanisms affecting these alloys: it consumes metal, can lead to component failure, and shortens service life. Oxidation resistance testing therefore plays a vital role in evaluating existing alloys and developing new high-performance ones.

During oxidation, metal atoms react with oxygen at elevated temperature to form oxide layers (scales) on the surface. The nature and growth rate of these layers determine how well the alloy resists further oxidation. A dense, slow-growing, adherent scale acts as a barrier to further attack, while a porous or poorly adherent one does not. Test methods vary with the application requirements and the specific degradation mechanisms being evaluated.

Thermogravimetric analysis (TGA) is one of the most widely used techniques for oxidation resistance testing. It continuously measures the mass change of a sample held at high temperature in a controlled atmosphere. Recording the mass gain due to oxidation as a function of time lets researchers determine the oxidation kinetics and judge whether the oxide layer that forms is protective. For a protective scale, the mass gain typically follows a parabolic time dependence, indicating that growth is controlled by diffusion through the oxide.

Cyclic oxidation testing reveals the spalling behavior of oxide layers, which is critical for applications involving thermal cycling. Samples are subjected to repeated heating and cooling cycles that simulate real-world operating conditions. The mass change after each cycle is recorded, and the oxide layer is examined for adhesion and protective quality.

Long-term exposure testing involves holding samples at a constant high temperature for extended periods, sometimes thousands of hours.
This method gives the most realistic assessment of oxidation behavior but requires significant time and resources.

In every case, the test environment (temperature, atmosphere composition, and gas flow rate) must be carefully controlled to obtain reproducible results. Test parameters are chosen to match the intended service conditions; for most high-temperature alloys, test temperatures typically range from 800°C to 1200°C.

Evaluating oxidation resistance involves more than mass measurements. The thickness, composition, and adhesion of the oxide layer are key indicators of its protective capability. Characterization techniques such as scanning electron microscopy (SEM), X-ray diffraction (XRD), and energy-dispersive X-ray spectroscopy (EDX) are used to analyze the oxide: SEM images the scale morphology and cross-section, XRD identifies the oxide phases present, and EDX determines the elemental composition.

The results of oxidation resistance testing provide valuable data for alloy development. By understanding how different alloying elements affect oxidation behavior, researchers can design materials with better resistance to high-temperature degradation. Chromium, aluminum, and silicon, for example, are known to improve oxidation resistance by forming protective Cr₂O₃, Al₂O₃, and SiO₂ layers, respectively. The test data also help establish service-life predictions for components operating in harsh environments, enabling more reliable design and maintenance schedules.
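
As a rough illustration of how isothermal TGA data can feed both kinetics and a service-life estimate, the Python sketch below assumes purely parabolic growth, (Δm/A)² = k_p·t, where Δm/A is the specific mass gain and k_p is the parabolic rate constant. It fits k_p by least squares through the origin and then estimates how long the scale would take to reach a chosen mass gain. The function names, the synthetic data, and the 2 mg/cm² threshold are hypothetical placeholders, not values from any real test.

```python
"""Parabolic-kinetics sketch for isothermal TGA data.

Assumes purely parabolic growth, (dm/A)^2 = k_p * t, with dm/A the
specific mass gain in mg/cm^2 and t in seconds. Names, data, and the
critical mass-gain threshold are hypothetical illustrations.
"""


def parabolic_rate_constant(times_s, mass_gain_mg_cm2):
    """Least-squares fit of (dm/A)^2 = k_p * t through the origin.

    Returns k_p in mg^2 cm^-4 s^-1.
    """
    if not times_s or len(times_s) != len(mass_gain_mg_cm2):
        raise ValueError("need non-empty, equal-length data")
    squared = [w * w for w in mass_gain_mg_cm2]                # (dm/A)^2
    numerator = sum(t * y for t, y in zip(times_s, squared))  # sum(t * y)
    denominator = sum(t * t for t in times_s)                 # sum(t^2)
    if denominator == 0:
        raise ValueError("all exposure times are zero")
    return numerator / denominator


def time_to_mass_gain(k_p, critical_gain_mg_cm2):
    """Time in seconds for parabolic growth to reach a given mass gain."""
    if k_p <= 0:
        raise ValueError("k_p must be positive")
    return critical_gain_mg_cm2 ** 2 / k_p


if __name__ == "__main__":
    # Synthetic, noise-free data generated with k_p = 1e-6 mg^2 cm^-4 s^-1.
    times = [3600.0 * h for h in (1, 5, 10, 25, 50, 100)]
    gains = [(1e-6 * t) ** 0.5 for t in times]
    k_p = parabolic_rate_constant(times, gains)
    print(f"k_p = {k_p:.3e} mg^2 cm^-4 s^-1")
    # Hypothetical threshold: 2 mg/cm^2 of specific mass gain.
    hours = time_to_mass_gain(k_p, 2.0) / 3600.0
    print(f"time to reach 2 mg/cm^2: {hours:.0f} h")
```

Real TGA curves often start with a faster transient stage, so in practice one would usually fit only the steady-state portion rather than forcing the whole curve through the origin as this sketch does. The lifetime estimate also ignores spallation and is valid only at the test temperature, because k_p depends strongly on temperature.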

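
For cyclic oxidation testing, one simple way to read the per-cycle mass data is to find the cycle at which the net mass change peaks. After that point, mass lost through oxide spallation outweighs mass gained through new oxide growth. The Python sketch below assumes one net specific mass change reading (mg/cm², relative to the unexposed sample) per cycle. The function names and data are hypothetical, and this peak criterion is only a simple heuristic, not a standardized evaluation procedure.

```python
"""Cyclic-oxidation sketch: per-cycle mass changes and a spallation-onset check.

Assumes one net specific mass change value (mg/cm^2, relative to the
unexposed sample) is recorded after each heating/cooling cycle.
Function names and data are hypothetical illustrations.
"""


def per_cycle_changes(net_mass_change):
    """Mass change contributed by each individual cycle.

    The first cycle is compared against zero (the unexposed sample).
    """
    previous = [0.0] + list(net_mass_change[:-1])
    return [curr - prev for curr, prev in zip(net_mass_change, previous)]


def spallation_onset(net_mass_change):
    """Return the 1-based cycle with the maximum net mass gain.

    Later readings below this peak mean spallation losses exceeded
    oxide growth. Returns None if no later reading falls below the peak.
    """
    if not net_mass_change:
        raise ValueError("need at least one cycle")
    peak = max(net_mass_change)
    peak_index = list(net_mass_change).index(peak)  # first cycle at the peak
    later = net_mass_change[peak_index + 1:]
    if not later or min(later) >= peak:
        return None
    return peak_index + 1


if __name__ == "__main__":
    # Synthetic net mass change after cycles 1..8 (mg/cm^2).
    data = [0.20, 0.32, 0.40, 0.45, 0.47, 0.46, 0.41, 0.33]
    for cycle, delta in enumerate(per_cycle_changes(data), start=1):
        print(f"cycle {cycle}: {delta:+.2f} mg/cm^2")
    print("net mass change peaks at cycle", spallation_onset(data))
```

Net mass change mixes oxide growth and spalled loss into one number; some test procedures also collect the spalled oxide to separate the two, which this sketch does not attempt.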

- +86-18921275456

- No. 21 Zhihui Road, Huishan District, Wuxi, Jiangsu, China
